Mirror a site for offline use

More than once, I’ve found myself at an airport or train station, or on a plane, boat or train, or in a car, with copious amounts of spare time and no internet access.

Last month, while integrating a payment processor’s API, strapped into my seat thirty thousand feet above Turkmenistan, I realized that the solution I’d found four hours earlier was worth stashing away for easy reference.

All that offline downtime could have gone to several languishing pet projects, had I brought the reference material to work from. If only I’d remembered to download the documentation for a particular API beforehand.

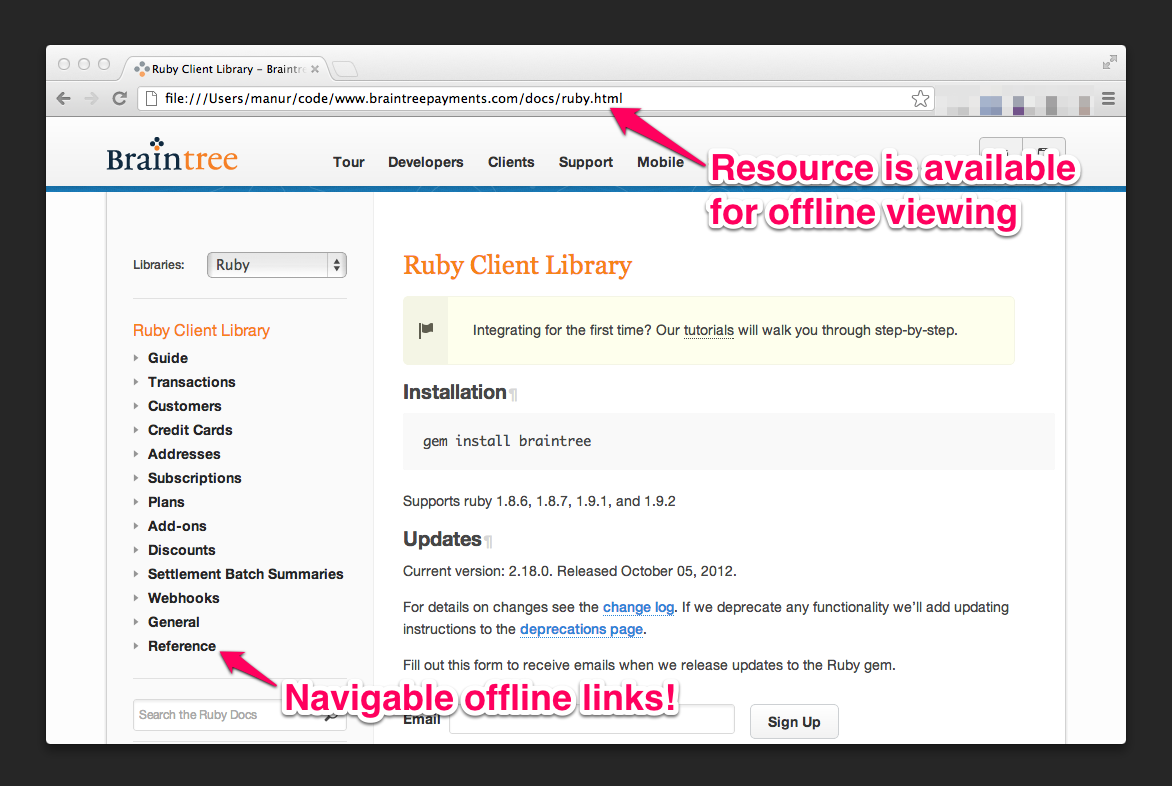

And not just a page or two, but every page under a URI subdirectory, fetched recursively, with images and links converted for offline viewing. That way there’s no unpleasant discovery that you’re blocked for hours because you forgot to download one section.

The best solution I’ve found for this problem is wget’s mirroring mode. I’m happy to report that it made a long flight across the world much more productive, freeing up my time on the ground for a long-awaited reunion with friends instead of huddling near a Wi-Fi hotspot to wrap up a project.

The example below mirrors one subsection of a site for offline use.

How to use

```shell
# First, instruct wget to ignore the robots.txt instructions
```
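
One way to do this is a minimal sketch assuming wget reads its per-user startup file, which is the file named by the `WGETRC` environment variable if that is set, otherwise `~/.wgetrc`. It appends the `robots = off` setting once. If you would rather not change the setting for every future run, passing `-e robots=off` on the wget command line applies it to a single invocation instead.

```shell
# Assumption: wget's per-user startup file is $WGETRC if set, else ~/.wgetrc.
wgetrc_file="${WGETRC:-$HOME/.wgetrc}"

# Append "robots = off" only if that exact line isn't already present,
# so running this snippet twice doesn't duplicate the setting.
if ! grep -qxF 'robots = off' "$wgetrc_file" 2>/dev/null; then
  echo 'robots = off' >> "$wgetrc_file"
fi
```

Because it lives in the startup file, the setting stays in effect for all later wget runs under your account until you remove the line.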

```shell
# Download an entire URI subsection for offline viewing.
```
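
A minimal sketch of the download step, built from standard wget options: `--mirror` (`-m`), `--convert-links` (`-k`), `--adjust-extension` (`-E`), `--page-requisites` (`-p`) and `--no-parent` (`-np`). Whether this is exactly the flag set used here is an assumption; the helper name `mirror_subsection` and the example URL are hypothetical.

```shell
# Hypothetical helper: mirror everything under one URL subdirectory.
#   --mirror            recursion + time-stamping with unlimited depth
#   --convert-links     rewrite links in saved pages to point at the local copies
#   --adjust-extension  give saved HTML/CSS files an .html/.css suffix
#   --page-requisites   also fetch the images, stylesheets, etc. each page needs
#   --no-parent         never ascend above the starting directory
mirror_subsection() {
  wget \
    --mirror \
    --convert-links \
    --adjust-extension \
    --page-requisites \
    --no-parent \
    "$1"
}

# Usage (placeholder URL; note the trailing slash):
#   mirror_subsection https://example.com/docs/api/
```

The trailing slash matters with `--no-parent`: wget's documentation notes that for HTTP it treats `https://example.com/docs/api` (no slash) as a file rather than a directory, so the boundary it won't climb above becomes `/docs/` instead of `/docs/api/`. If you skipped the `.wgetrc` change, adding `-e robots=off` to the command has the same effect for that run.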

Options

```
-m # Use wget to set up a mirror, turning on recursive scraping
```
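
The `-m` option above is itself a shortcut. According to the wget manual, it is currently equivalent to `-r -N -l inf --no-remove-listing`. Spelling those options out, as in this sketch, makes it easy to adjust one of them, for example swapping `-l inf` for a finite depth. The helper name `mirror_expanded` and the URL are hypothetical.

```shell
# Hypothetical helper: the long form of `wget -m URL`.
#   -r                   recursive retrieval
#   -N                   time-stamping: re-fetch only files the server reports as changed
#   -l inf               no limit on recursion depth
#   --no-remove-listing  keep the .listing files wget writes for FTP directories
mirror_expanded() {
  wget -r -N -l inf --no-remove-listing "$1"
}

# Usage (placeholder URL):
#   mirror_expanded https://example.com/docs/api/
```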